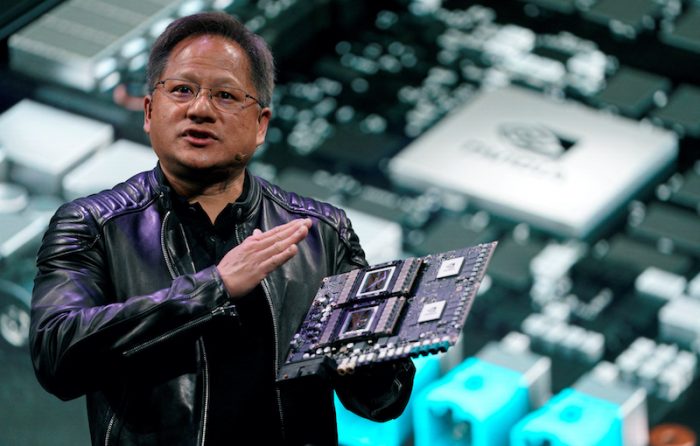

Nvidia chief executive Jensen Huang is expected to reveal fresh details about the group’s new artificial intelligence chip at its annual software developer conference on Tuesday.

Nvidia is trying to introduce a flagship chip every year, but has so far hit both internal and external obstacles.

Late last year Huang gave a hint that the company’s new flagship offering would be named Rubin and that it would consist of a family of chips – a graphics processing unit, a central processing unit and networking chips.


Those chips – designed to work in huge data centres that train AI systems – are expected to go into production this year and roll out in high volumes in 2026.

The company’s current flagship chip, called Blackwell, is coming to market more slowly than expected after a design flaw caused manufacturing problems.

The broader AI industry grappled with delays last year as the prior approach of feeding expanding troves of data into ever-larger data centres full of Nvidia chips started to show diminishing returns.

$130bn in sales last year, mostly data centre chips

Over the past three years, Nvidia stock has more than quadrupled in value as the company powered the rise of advanced AI systems such as ChatGPT, Claude and many others.

Much of that success stemmed from the decade that the Santa Clara, California-based company spent building software tools to woo AI researchers and developers, but it was Nvidia’s data centre chips, which sell for tens of thousands of dollars each, that accounted for the bulk of its $130.5 billion in sales last year.

Nvidia shares tumbled this year when Chinese startup DeepSeek claimed it could produce a competitive AI chatbot with far less computing power – and thus fewer Nvidia chips – than earlier generations of the model.

Huang has fired back that newer AI models that spend more time thinking through their answers will make Nvidia’s chips even more important, because they are the fastest at generating “tokens,” the basic units of data that AI programmes process and produce.

“When ChatGPT first came out, the token generation rate only had to be about as fast as you can read,” Huang told Reuters last month.

“However, the token generation rate now is how fast the AI can read itself, because it’s thinking to itself. And the AI can think to itself a lot faster than you and I can read, and because it has to generate so many future possibilities before it presents the right answer to you.”

- Reuters with additional editing by Jim Pollard
