In its first such collaboration with the West on artificial intelligence (AI), China has agreed to work with the United States and Europe to assess and address the risk of ‘catastrophic’ harm from the technology.

The three, along with 26 other signatories, signed the “Bletchley Declaration” at the AI Safety Summit in Britain on Wednesday, aimed at charting a safe way forward for AI.

The summit and the declaration come on the heels of warnings from some tech executives, including Elon Musk, and political leaders that the rapid development of AI poses an existential threat to the world.

Those warnings have sparked a race by governments and international institutions worldwide to design safeguards and regulation.

“We resolve to work together in an inclusive manner to ensure human-centric, trustworthy and responsible AI that is safe, and supports the good of all through existing international fora and other relevant initiatives,” the Bletchley Declaration said.

“Noting the importance of inclusive AI and bridging the digital divide, we reaffirm that international collaboration should endeavour to engage and involve a broad range of partners as appropriate.”

The declaration set out a two-pronged agenda focused on identifying risks of shared concern and building scientific understanding of them, while also developing cross-country policies to mitigate them.

It is a first for Western efforts to manage AI’s safe development.

Wu Zhaohui, China’s vice minister of science and technology, joined the US and EU leaders and tech bosses such as Musk and ChatGPT’s Sam Altman at Bletchley Park, home of Britain’s World War Two code-breakers.

Other Asian signatories to the pact included India, Indonesia, Japan, the Philippines, Singapore and South Korea.

Questions on China’s presence

Wu Zhaohui, China’s vice minister of science and technology, told the opening session of the two-day summit that Beijing was ready to increase collaboration on AI safety to help build an international “governance framework”.

“Countries regardless of their size and scale have equal rights to develop and use AI,” he said.

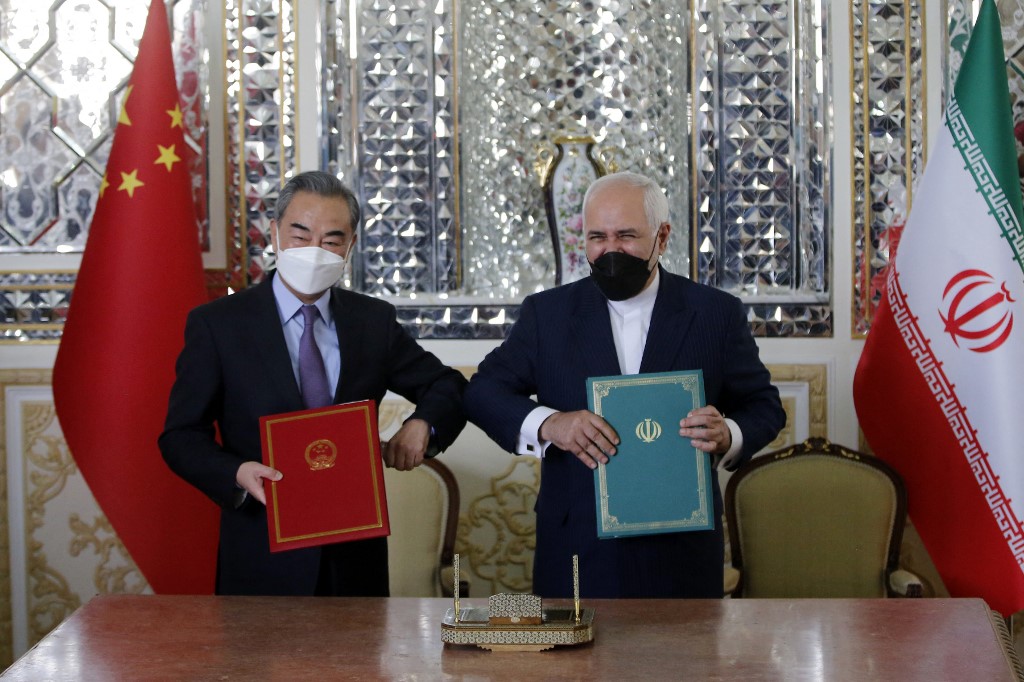

China is a key participant at the summit, given the country’s role in developing AI. But some British lawmakers have questioned whether it should be there given the low level of trust between Beijing, Washington and many European capitals when it comes to Chinese involvement in technology.

The United States made clear on the eve of the summit that the call to Beijing had very much come from Britain.

“This is the UK invitation, this is not the US,” American ambassador to London Jane Hartley said.

US Vice President Kamala Harris, who attended the summit, used it to announce the launch of a US AI Safety Institute. The announcement came just days after US President Joe Biden signed an executive order on AI on Monday.

ChatGPT-fuelled fears

While the European Union has focused its AI oversight on data privacy and surveillance and their potential impact on human rights, the British summit is looking at so-called existential risks from highly capable general-purpose models called “frontier AI”.

“We are especially concerned by such risks in domains such as cybersecurity and biotechnology, as well as where frontier AI systems may amplify risks such as disinformation. There is potential for serious, even catastrophic, harm, either deliberate or unintentional, stemming from the most significant capabilities of these AI models,” the Bletchley Declaration says.

Mustafa Suleyman, a cofounder of Google DeepMind, told reporters he did not think current AI frontier models posed any “significant catastrophic harms”.

He said, however, it made sense to plan ahead as the industry trains ever larger models.

Fears about the impact AI could have on economies and society took off in November last year when Microsoft-backed OpenAI made ChatGPT available to the public.

Using natural language processing tools to create human-like dialogue, ChatGPT has stoked fears, including among some AI pioneers, that machines could in time achieve greater intelligence than humans, leading to unlimited, unintended consequences.

Next summit in South Korea

Governments and officials are now trying to chart a way forward alongside AI companies, which fear being weighed down by regulation before the technology reaches its full potential.

“I don’t know what necessarily the fair rules are, but you’ve got to start with insight before you do oversight,” tech billionaire Musk told reporters at the summit.

A “third-party referee” could be used to sound the alarm when risks develop, he added.

Meanwhile, British digital minister Michelle Donelan said it was an achievement just to get so many key players in one room. She announced two further AI Safety Summits, one to be held in South Korea in six months and another in France six months after that.

“For the first time, we now have countries agreeing that we need to look not just independently but collectively at the risk around frontier AI,” Donelan told reporters.

- Reuters, with additional inputs from Vishakha Saxena
