Chinese state-backed hackers have been experimenting with OpenAI’s generative artificial intelligence (AI) tools to gain information on their rivals, the technology firm’s key backer Microsoft has said.

The US software giant said state-backed hackers from North Korea, Iran and Russia had also been using OpenAI’s tools to hone their skills and trick their targets.

The company announced the finding on Wednesday as it rolled out a blanket ban on state-backed hacking groups using its AI products.

“Independent of whether there’s any violation of the law or any violation of terms of service, we just don’t want those actors that we’ve identified – that we track and know are threat actors of various kinds – we don’t want them to have access to this technology,” Microsoft Vice President for Customer Security Tom Burt said.

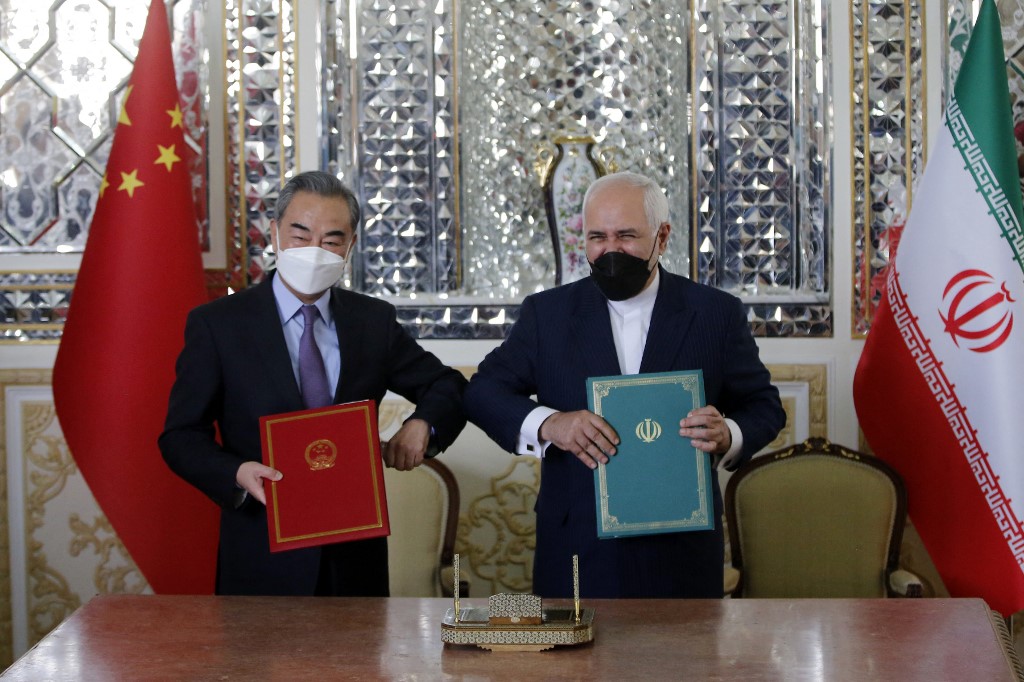

In a blog post, Microsoft said it had tracked hacking groups affiliated with Russian military intelligence, Iran’s Revolutionary Guard, and the Chinese and North Korean governments using large language models (LLMs).

“We really saw them just using this technology like any other user,” Microsoft’s Burt said.

Chinese state-backed hackers used LLMs to seek information on “global intelligence agencies, domestic concerns, notable individuals, cybersecurity matters, topics of strategic interest, and various threat actors,” Microsoft noted in its blog.

They also used LLMs to develop code “with potential malicious intent” and translate computing terms and technical papers, the tech giant said.

China’s US embassy spokesperson Liu Pengyu said it opposed “groundless smears and accusations against China” and advocated for the “safe, reliable and controllable” deployment of AI technology to “enhance the common well-being of all mankind.”

Russian hackers focus on Ukraine; North Korea on phishing

The allegation that state-backed hackers have been caught using AI tools to help boost their spying capabilities is likely to underline concerns about the rapid proliferation of the technology and its potential for abuse.

Senior cybersecurity officials in the West have been warning since last year that rogue actors were abusing such tools, although specifics have, until now, been thin on the ground.

“This is one of the first, if not the first, instances of an AI company coming out and discussing publicly how cybersecurity threat actors use AI technologies,” said Bob Rotsted, who leads cybersecurity threat intelligence at OpenAI.

OpenAI and Microsoft described the hackers’ use of their AI tools as “early-stage” and “incremental.” Burt said neither had seen cyber spies make any breakthroughs.

Hackers alleged to be working on behalf of Russia’s military spy agency, widely known as the GRU, used the models to research “various satellite and radar technologies that may pertain to conventional military operations in Ukraine,” Microsoft said.

North Korean hackers, meanwhile, used the models to generate content “that would likely be for use in spear-phishing campaigns” against regional experts, the firm said.

Iranian hackers targeted feminists

Iranian hackers also leaned on the models to write more convincing emails, Microsoft said, at one point using them to draft a message attempting to lure “prominent feminists” to a booby-trapped website.

They also tried to use large language models to develop code to evade detection.

Microsoft raised the alarm on Iranian hackers’ use of AI earlier this month too.

In a blog post last week, the tech firm noted that state-backed Iranian hackers had, in December, succeeded in interrupting streaming television services and replacing them with “a fake news video featuring an apparently AI-generated news anchor.”

France24 reported on Tuesday that affected services included British public broadcaster BBC and “a host of other European TV streaming services.”

Microsoft said its objective in releasing the report was “to ensure the safe and responsible use of AI technologies like ChatGPT.”

But neither Burt nor Rotsted commented on the volume of activity or how many accounts had been suspended.

Burt, meanwhile, defended the zero-tolerance ban on hacking groups – which doesn’t extend to Microsoft offerings such as its search engine, Bing – by pointing to the novelty of AI and the concern over its deployment.

“This technology is both new and incredibly powerful,” he said.

- Reuters, with additional inputs from Vishakha Saxena
