Concern is rising about unrestrained use of generative artificial intelligence (AI) systems.

In an era of intense geopolitical rivalry among the world’s most powerful nations, fears have been raised about the threat of ‘rogue’ use of AI in wars and elections, through deepfake technology and the ‘influence operations’ already being waged on social media platforms.

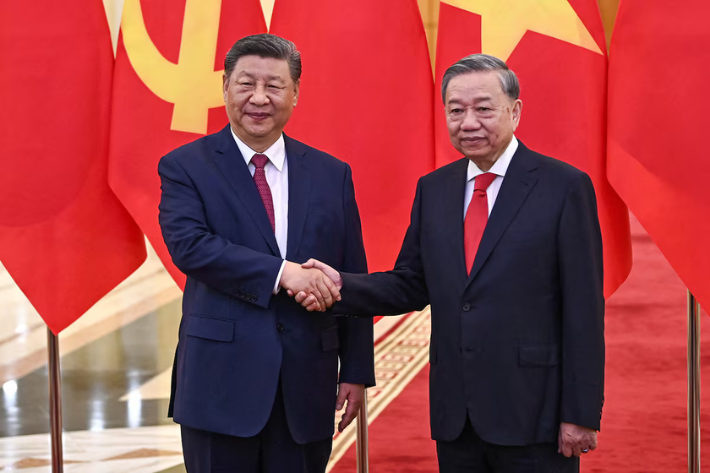

World leaders have sought to limit the threat of AI with codes of conduct discussed at global summits, but there are signs that more needs to be done, particularly in countries across Asia and the Global South that are still coming to grips with the downsides of the latest tech boom.


Companies in Japan are the latest to raise the alarm, saying they fear democracy and social order could collapse if AI is left unchecked.

Japan’s Yomiuri Shimbun Group and Nippon Telegraph and Telephone (NTT), the country’s biggest telecom group, called on Monday for the swift passage of a law to restrain generative AI, the Wall Street Journal reported. Their proposal warned that AI tools had already begun to damage human dignity because they seize users’ attention without regard to morals or accuracy.

Japan needed to pass legislation immediately, the proposal said, because the threat of unrestrained AI was so great – in a “worst-case scenario, democracy and social order could collapse, resulting in wars.”

The US under the Biden administration, Britain and the European Union have been leading a global push to regulate AI. The EU’s new AI law calls on companies developing AI systems to notify regulators of serious incidents.

Israel used AI system in Gaza, sources claim

Meanwhile, claims have emerged that the Israeli army used a ‘rogue’ artificial intelligence software programme to select targets for its brutal offensive against Hamas in Gaza.

Israeli intelligence sources have said the military’s bombing campaign, which has killed tens of thousands in Gaza, “used a previously undisclosed AI-powered database that at one stage identified 37,000 potential targets based on their apparent links to Hamas,” according to a report by The Guardian.

The sources alleged that Israeli military officials permitted large numbers of Palestinian civilians to be killed, particularly during the initial months of the war. Their “candid testimony” showed officials had been using “machine-learning systems to help identify targets” during the six-month war, the report said.

“Israel’s use of powerful AI systems in its war on Hamas has entered uncharted territory for advanced warfare, raising a host of legal and moral questions, and transforming the relationship between military personnel and machines,” the report said.

The latest development would perhaps not surprise Eurasia Group founder and author Ian Bremmer, who warned in his book ‘The Power of Crisis’ that disruptive technology poses a greater threat to humanity than climate change.

But not all AI experts agree.

Andrew Ng, one of the founders of Google Brain and a Stanford University professor who taught machine learning to OpenAI co-founder Sam Altman, says large tech companies are exaggerating the risks of AI.

The claim that artificial intelligence could lead to the extinction of humanity was a “bad idea” pushed by big tech companies seeking to trigger heavy regulation that would reduce competition in the AI sector, Ng said in an interview late last year.

But with big tech companies often seen as doing little to contain the financial scams rampant on their platforms, is it any wonder these fears arise?

So be warned: this debate is only just heating up.

- Jim Pollard
