Scientists claim to have made a crucial energy-saving breakthrough that should slash the power demands of the burgeoning AI sector, LiveScience reported.

Researchers have developed a new type of memory device that they say could reduce the energy consumption of artificial intelligence by a factor of at least 1,000, the story continued.

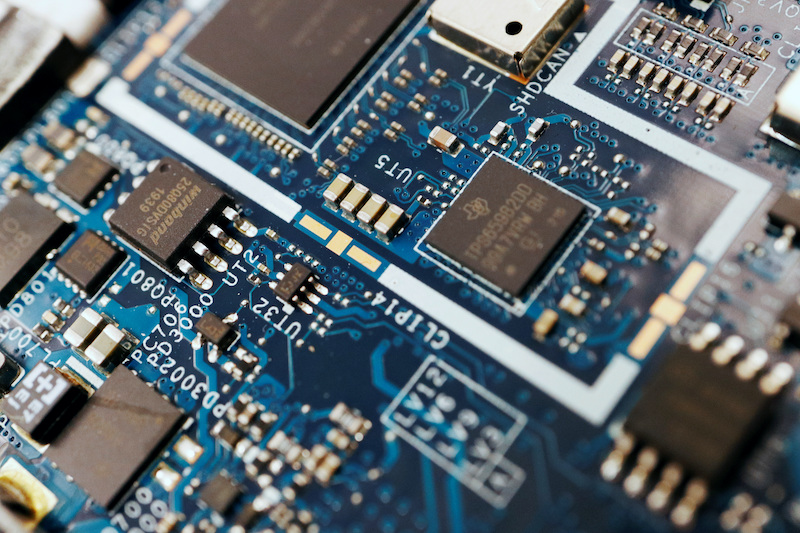

Called computational random-access memory (CRAM), the new device performs computations directly within its memory cells, eliminating the need to transfer data across different parts of a computer.

According to figures from the International Energy Agency, the report went on, global energy consumption for AI could more than double from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026 – equivalent to Japan’s total electricity consumption.

Read the full story: LiveScience

- By Sean O’Meara
